Google Search Console: Couldn’t fetch | Sitemap could not be read. Why?

Recently, we noticed that some of our website's sitemaps weren't being processed in Google Search Console. This was unusual, since other sitemaps for the same website showed a status of Success.

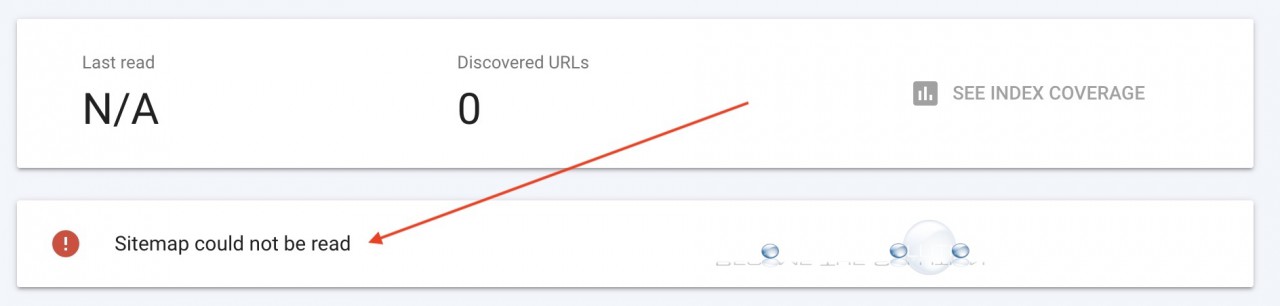

You may see one of the following messages for your sitemap in Google Search Console: Couldn’t fetch or Sitemap could not be read.

Each of our sitemaps contained 50,000 URLs, the maximum a single sitemap can contain according to Google Search Central, and each file was also under the 50MB size limit.
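To confirm that a sitemap really stays within those two limits before submitting it, a quick local check can help. The sketch below assumes an uncompressed `urlset` sitemap in the standard sitemaps.org namespace, already read into memory; `check_sitemap` is a hypothetical helper, not part of any Google tool. A gzipped sitemap would need `gzip.decompress` first, because the 50MB limit applies to the uncompressed size.

```python
import xml.etree.ElementTree as ET

# Per-file limits documented by Google Search Central and sitemaps.org.
MAX_URLS = 50_000
MAX_BYTES = 50 * 1024 * 1024  # 50MB uncompressed = 52,428,800 bytes
NS = "{http://www.sitemaps.org/schemas/sitemap/0.9}"


def check_sitemap(xml_bytes: bytes) -> dict:
    """Count <url> entries in an uncompressed urlset sitemap and compare them to the limits."""
    root = ET.fromstring(xml_bytes)
    if root.tag != NS + "urlset":
        # A sitemap index (<sitemapindex>) or non-sitemap XML is out of scope here.
        raise ValueError(f"expected a <urlset> sitemap, got {root.tag}")
    url_count = len(root.findall(NS + "url"))  # direct <url> children only
    size = len(xml_bytes)
    return {
        "urls": url_count,
        "bytes": size,
        "within_url_limit": url_count <= MAX_URLS,
        "within_size_limit": size <= MAX_BYTES,
    }
```

Usage, with `sitemap.xml` as a placeholder path: `check_sitemap(open("sitemap.xml", "rb").read())`. Parsing the whole file in memory is fine at these sizes, though a streaming parser would scale better.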

How To Verify Your Google Search Console Sitemap is Working

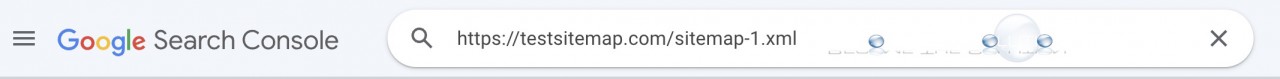

1. Open Google Search Console.

2. Paste your sitemap URL into the Inspect any URL in “site.com” bar at the top of the page and press Enter.

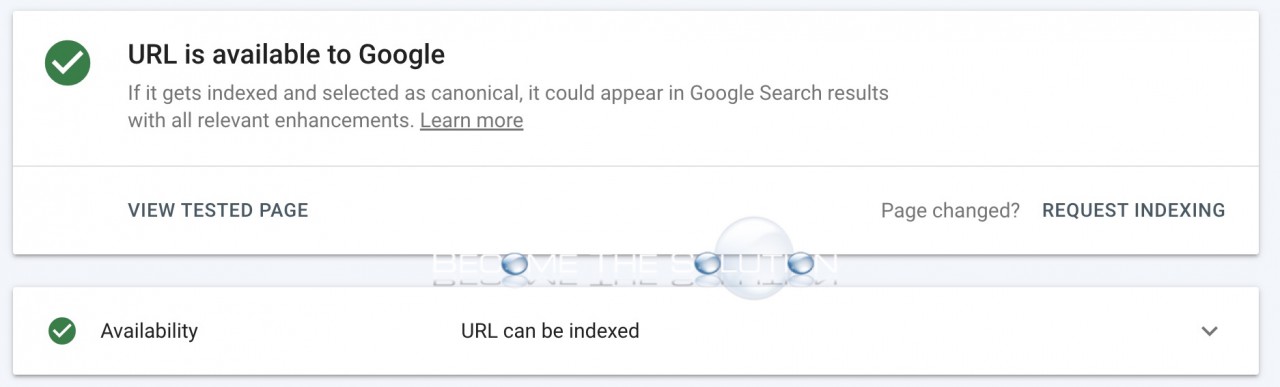

3. You should see URL is not on Google. This is normal, since sitemaps themselves aren’t indexed.

4. In the top right, click Test Live URL.

5. If the test shows URL is available to Google, you don’t need to do anything else. Google should process your sitemap eventually.
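Outside Search Console, you can run a rough local approximation of step 5 by fetching the sitemap yourself and checking that it returns HTTP 200 and parses as a sitemap. This is only a sketch under our own assumptions: it is not what Google's live test does, it does not check robots.txt or any Googlebot-specific blocking, and the helper names and the `sitemap-check/0.1` User-Agent string are hypothetical.

```python
import urllib.error
import urllib.request
import xml.etree.ElementTree as ET

NS = "{http://www.sitemaps.org/schemas/sitemap/0.9}"


def fetch_sitemap(url: str, timeout: float = 30.0) -> tuple:
    """Fetch a URL and return (status, content_type, body); HTTP errors become a status, not an exception."""
    req = urllib.request.Request(url, headers={"User-Agent": "sitemap-check/0.1"})  # hypothetical UA
    try:
        with urllib.request.urlopen(req, timeout=timeout) as resp:
            return resp.status, resp.headers.get("Content-Type", ""), resp.read()
    except urllib.error.HTTPError as err:
        content_type = err.headers.get("Content-Type", "") if err.headers else ""
        return err.code, content_type, b""


def summarize(status: int, content_type: str, body: bytes) -> tuple:
    """Return (ok, reason) for a fetched sitemap response."""
    if status != 200:
        return False, f"HTTP {status}"
    try:
        root = ET.fromstring(body)
    except ET.ParseError as exc:
        return False, f"body is not well-formed XML: {exc}"
    if root.tag not in (NS + "urlset", NS + "sitemapindex"):
        return False, f"unexpected root element {root.tag}"
    kind = root.tag[len(NS):]
    return True, f"{kind} with {len(root)} entries ({content_type or 'no content-type'})"
```

Usage, with a placeholder URL: `summarize(*fetch_sitemap("https://example.com/sitemap.xml"))`. An HTML error page served with status 200, a common cause of unreadable sitemaps, shows up here as a parse failure or an unexpected root element.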

What We Believe Is Happening

If your domain is newer and was only recently indexed by Google, Google likely will not process such a large sitemap right away. For comparison, one of our sites has been indexed in Google for 10 years and has multiple sitemaps with 50,000 URLs each.

As you can see below, Google is crawling our sitemaps but has yet to finish crawling the newer ones, likely because of their large size.

You can test this in Google Search Console by reducing the number of URLs in your sitemaps and resubmitting them. Google's documented limit is 50,000 URLs and 50MB (uncompressed) per sitemap file. Be sure to clear your website's backend cache before resubmitting a modified sitemap, so that Google re-crawls the updated file rather than a stale cached copy.
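One way to reduce the URLs per file without dropping any is to split one large sitemap into several smaller ones and list them in a sitemap index, which you then submit in Search Console. The sketch below assumes you already have the full URL list in memory; the `sitemap-N.xml` and `sitemap-index.xml` filenames, the 10,000-per-file default, and the helper names are hypothetical choices for illustration. Writing the files to your web root is left out.

```python
from xml.sax.saxutils import escape

NS_URI = "http://www.sitemaps.org/schemas/sitemap/0.9"
# Sitemaps must entity-escape & < > ' "; escape() handles the first three by default.
QUOTES = {"'": "&apos;", '"': "&quot;"}


def build_urlset(urls):
    """Render one <urlset> sitemap containing the given URLs."""
    entries = "".join(f"<url><loc>{escape(u, QUOTES)}</loc></url>" for u in urls)
    return f'<?xml version="1.0" encoding="UTF-8"?>\n<urlset xmlns="{NS_URI}">{entries}</urlset>\n'


def split_sitemaps(urls, base_url, per_file=10_000):
    """Return {filename: xml_text} for child sitemaps plus a sitemap index that lists them."""
    if not 1 <= per_file <= 50_000:
        raise ValueError("per_file must be between 1 and 50,000")
    files, locs = {}, []
    for n, start in enumerate(range(0, len(urls), per_file), start=1):
        name = f"sitemap-{n}.xml"  # hypothetical naming scheme
        files[name] = build_urlset(urls[start:start + per_file])
        locs.append(f"{base_url.rstrip('/')}/{name}")
    index_entries = "".join(f"<sitemap><loc>{escape(loc, QUOTES)}</loc></sitemap>" for loc in locs)
    files["sitemap-index.xml"] = (
        f'<?xml version="1.0" encoding="UTF-8"?>\n'
        f'<sitemapindex xmlns="{NS_URI}">{index_entries}</sitemapindex>\n'
    )
    return files
```

For example, 25,000 URLs with `per_file=10_000` yield three child sitemaps (10,000, 10,000 and 5,000 URLs) plus one index. Whether smaller files get processed sooner is the hypothesis to test, not a guarantee.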

Finally, in our experience, Google indexing simply takes time. When in doubt, wait at least a week to see whether Google starts processing your sitemap files. This is especially true for newer domains.
